Hold onto your hats, folks! Kunlun AI, the company behind some seriously impressive tech, just shook up the AI world. Following its initial foray into Chinese-language reasoning with Skywork-o1, its TianGong team has unveiled Skywork-OR1, a new series of open-source models at the 7B and 32B parameter scales.

Let’s be clear: this isn’t just another release. Kunlun claims, and early reports suggest, that Skywork-OR1 delivers leading-edge reasoning performance for its size on complex logic and problem-solving, tasks that have usually demanded much larger models. If those claims hold up, this is a model that doesn’t just sound smart but actually reasons well.

And here’s the kicker: it’s completely open and free to use. That’s right. No licensing fees, no hidden agendas. Kunlun is putting this serious tech into the hands of developers and researchers everywhere. This is a huge win for innovation and a direct challenge to the walled-garden approach of some of the bigger players.

Diving Deeper: Understanding Reasoning Models

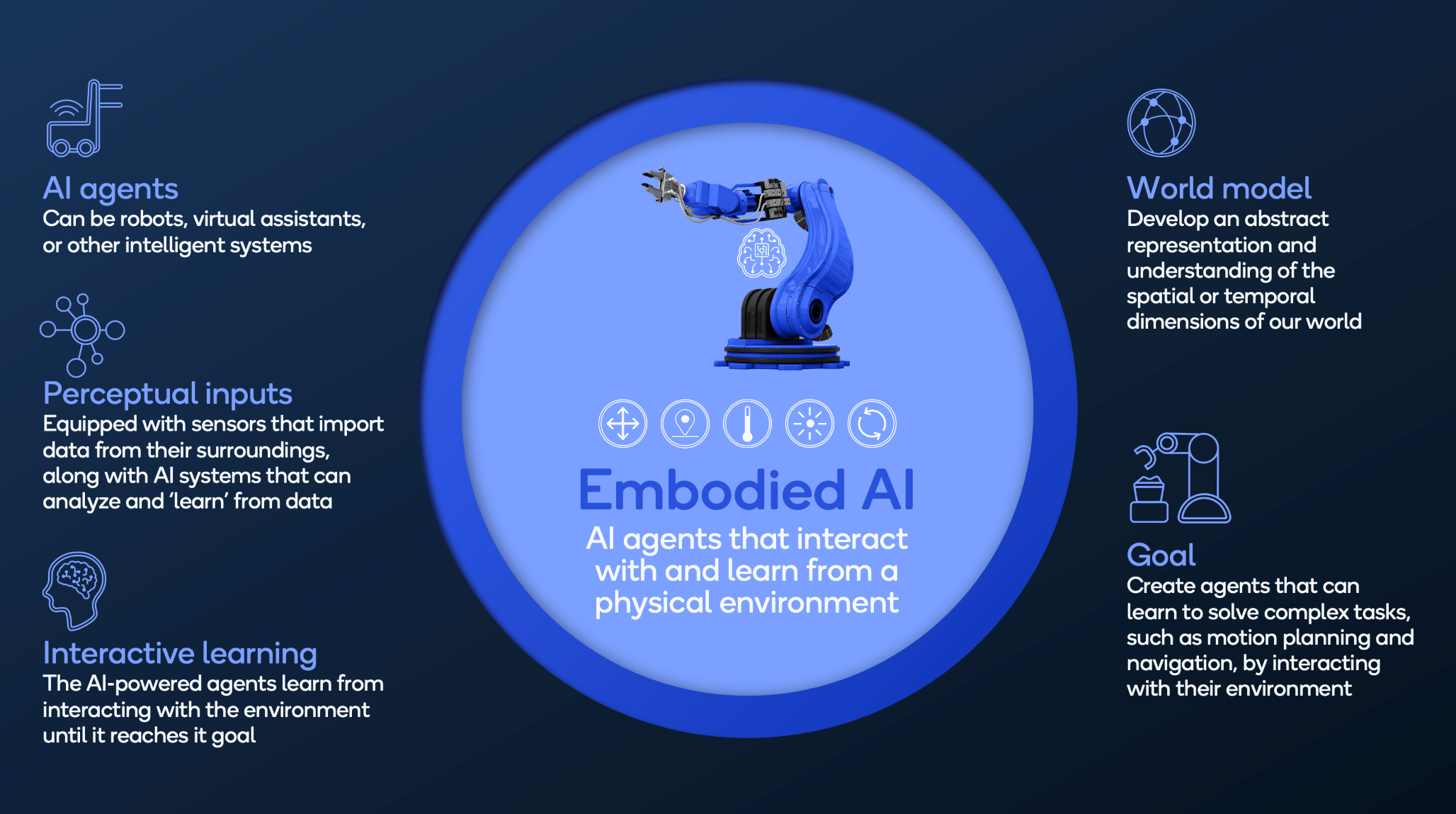

Reasoning models are a crucial evolution in the AI space. They go beyond simply identifying patterns in data. Instead, they’re designed to understand relationships, draw conclusions, and solve problems in a way that mimics human thought.

Models with larger parameter counts have traditionally dominated reasoning tasks, but they come with massive computational costs. Skywork-OR1 suggests that, with smart architecture and focused training, more efficient models can achieve exceptional results.

These models aren’t just about abstract logic. They have real-world applications in areas like financial analysis, legal reasoning, scientific discovery, and fraud detection. Stronger reasoning can mean more reliable results in each of these fields.

This release is particularly significant because of its open-source nature. Open access allows for community contributions, faster development, and ultimately a more robust and reliable AI ecosystem. Kunlun AI is taking a bold step, and I, for one, am excited to see where this goes.